Why I Stopped Building for visionOS (And What Could Bring Me Back)

Explore the limitations hindering the Vision Pro from reaching its full potential. This article highlights the missing APIs essential for transforming it into a true mixed-reality platform and discusses what needs to change for that to happen.

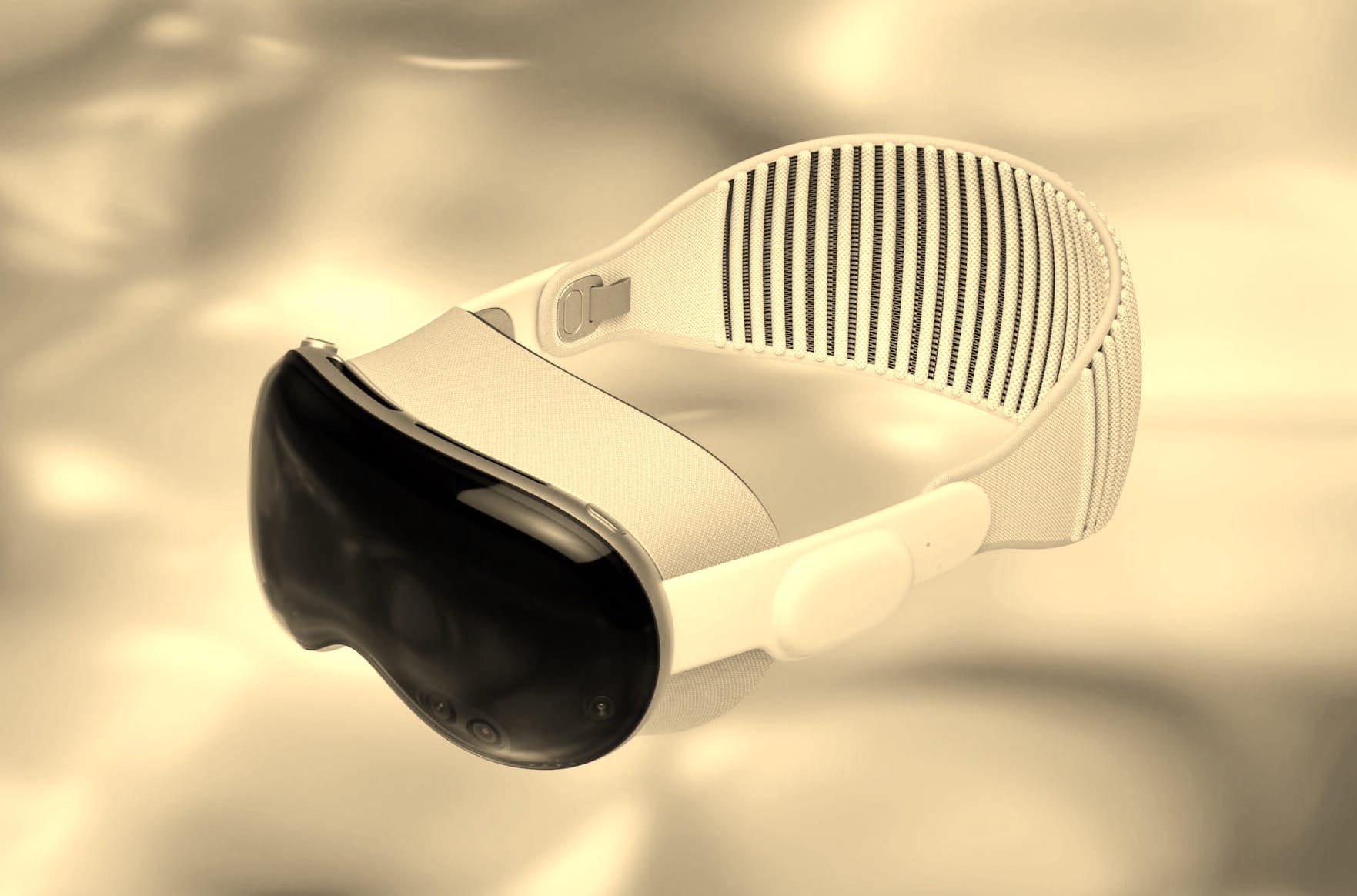

When Apple first introduced the Vision Pro, I was incredibly excited. The platform’s potential felt boundless, and as a developer, it seemed like a new frontier to explore. I jumped in headfirst, seeing a rare opportunity for experimentation and innovation. However, after releasing a few apps and working extensively with visionOS, I realized I was facing limitations that shouldn't have existed.

I had several unique app ideas, but I found myself restricted by a lack of APIs that would make those concepts feasible. I would have released more apps if visionOS had delivered the tools necessary for creating the AR/VR experiences the device promised. This isn't about wanting a more open platform like macOS; I understand the value of the security and simplicity of the iOS/iPadOS ecosystem. Yet visionOS feels like little more than iPadOS with different input options. What’s missing are APIs designed specifically to take advantage of the immersive experiences that the Vision Pro can offer.

Here are the five APIs that I believe could transform visionOS from a promising experiment into a platform truly worth developing for:

1. Magnetically Pinning Windows and Objects to Surfaces

Imagine being able to pin windows or 3D objects to walls or furniture in a room, permanently. Users could create persistent setups in their environment, and developers could turn pinning on or off depending on the context of their experience. For instance, my Posters app would finally make sense! More importantly, these pinned objects should remain in place even after restarting the system, similar to desktop environments or focus modes. This persistence should also extend across users: when switching to guest mode, all pinned items should stay exactly where they were placed.

Currently, augmenting reality feels temporary on the Vision Pro due to the lack of this API, which makes it feel more like a VR-only device, and I don't believe that aligns with Apple's vision for the platform. This limitation restricts the full potential of mixed-reality experiences, as users cannot rely on their digital objects staying put in their physical space. Therefore, the ability to magnetically pin windows and objects is the most crucial API needed to elevate the Vision Pro beyond a VR experience and realize its true mixed-reality potential.

2. Advanced Room Scanning with Editable 3D Models

A hybrid API combining the power of RoomPlan and the 3D scanner available on iOS could revolutionize developers' ability to create immersive content. While scanning a room is currently possible, it lacks the depth required for fully interactive spaces. The next step should allow developers to capture not only the dimensions but also the colors and textures, producing high-fidelity 3D models.

Why aren’t these scanning APIs available on the Apple Vision Pro? They require a LiDAR sensor, which the Vision Pro has. Scanning with the Vision Pro would also be more comfortable, and users could see the results in 3D immediately. Ideally, Apple could also ship an app for developers to edit these environments directly within the Vision Pro using hand gestures. For instance, if I move a table and rescan the room, the system should recognize the change and treat the table as a movable object. Apple's AI tools could fill in gaps with realistic textures and objects, making 3D environment creation more intuitive and reducing the need for complex CAD tools.

3. A Skeletal Recognition API for Fun AR Interactions

One of my favorite app ideas involved body part detection using Apple's AR frameworks to bring a bit of The Sims into the real world. Imagine walking around and seeing a floating green diamond (like the Sims' plumbob) above each person, allowing you to interact with them in unique and playful ways. To make this possible, a simple skeleton tracking API would be a game-changer, enabling developers to recognize body movements and gestures. Even a rough estimation of head position or arm movement could unlock features like interactive speech bubbles based on what someone is saying or feeling. Advanced APIs could detect facial expressions, creating opportunities for floating emotion icons or interaction suggestions akin to those in The Sims.

I understand that Apple doesn’t want to give full camera access—and honestly, I wouldn’t use the Vision Pro if they did. However, since the camera is already on, the system could share detected world information that respects user privacy while enabling these kinds of experiences. There’s still a lot of untapped potential that could enhance AR interactions significantly.
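To illustrate how little data such an API would need to expose, here is a minimal sketch. Everything in it is hypothetical: `Position3D`, `Joint`, `PersonSkeleton`, and `markerPosition(for:)` are names invented for this example and are not part of visionOS. The assumption is that the system would hand an app only joint positions with a confidence value, never camera frames.

```swift
import Foundation

// Hypothetical types: these are invented for illustration and are not
// part of any visionOS framework.
struct Position3D {
    var x, y, z: Double   // meters, +Y is up
}

struct Joint {
    let name: String          // e.g. "head", "leftHand"
    let position: Position3D
    let confidence: Double    // 0...1, how sure the system is about this joint
}

struct PersonSkeleton {
    let id: UUID
    let joints: [Joint]
}

/// Returns where a floating marker (the "plumbob") should sit above a person,
/// or nil if the head joint is missing or too uncertain.
func markerPosition(for person: PersonSkeleton,
                    heightAboveHead: Double = 0.3,
                    minimumConfidence: Double = 0.5) -> Position3D? {
    guard let head = person.joints.first(where: {
        $0.name == "head" && $0.confidence >= minimumConfidence
    }) else { return nil }
    return Position3D(x: head.position.x,
                      y: head.position.y + heightAboveHead,
                      z: head.position.z)
}
```

With something this small, an app could place a diamond above each detected person without ever seeing who they are or what the room looks like.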

4. Interactive Spatial 360° and 180° Video Elements

While the Vision Pro promises immersive video experiences, there is currently no built-in way to integrate interactive buttons or objects into spatial 180°/360° videos. Developers should be able to place clickable elements in the space around the viewer while a video is playing. Imagine offering interactive tours or guided experiences where users can click on objects to receive more information or switch between videos showing different times or perspectives.

This feature could also enable users to "time travel" within a location by seamlessly switching between video recordings of the same place at different times. Right now, such interactions require painstakingly building 3D environments from scratch. A simple API for placing interactive elements within videos would vastly simplify the process and open up endless possibilities for interactive storytelling, educational tools, and more. Currently, coming even close to such an experience takes a lot of effort, including building a custom video player inside RealityKit that renders a different image for each eye. Hacks like this shouldn't be needed.
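To make the idea concrete, here is a minimal sketch of what a video hotspot model might look like. All of it is hypothetical: `Hotspot`, `Point3D`, `position(of:radius:)`, and `activeHotspots(_:at:)` are names invented for illustration, not part of visionOS or RealityKit. It only shows the math for turning a viewing direction into a point on the video sphere (assuming RealityKit's convention of -Z forward, +X right, +Y up) and for limiting a hotspot to a time range of the video.

```swift
import Foundation

// Hypothetical model: none of these types exist in visionOS or RealityKit.
struct Hotspot {
    let title: String
    let yawDegrees: Double                 // 0 = straight ahead, positive = to the viewer's right
    let pitchDegrees: Double               // 0 = horizon, positive = up
    let visibleRange: ClosedRange<Double>  // seconds of video time
}

struct Point3D: Equatable {
    var x, y, z: Double
}

/// Converts a hotspot's viewing direction into a point on a sphere of the
/// given radius centered on the viewer (-Z forward, +X right, +Y up).
func position(of hotspot: Hotspot, radius: Double) -> Point3D {
    let yaw = hotspot.yawDegrees * .pi / 180
    let pitch = hotspot.pitchDegrees * .pi / 180
    return Point3D(
        x: radius * cos(pitch) * sin(yaw),
        y: radius * sin(pitch),
        z: -radius * cos(pitch) * cos(yaw)
    )
}

/// Returns the hotspots that should be shown at the given playback time.
func activeHotspots(_ hotspots: [Hotspot], at time: Double) -> [Hotspot] {
    hotspots.filter { $0.visibleRange.contains(time) }
}
```

A system-provided API could take a list like this and handle rendering, gaze targeting, and tap handling itself, so developers would only describe where and when each element appears.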

5. Discovering the World: The Future of Apple Maps

One of the most surprising gaps in visionOS is the absence of a native Maps app optimized for the Vision Pro. While Look Around offers immersive street-level views on other devices, the Vision Pro could elevate this experience by placing users directly into 3D environments where they can interact with their surroundings in real time. Apple’s AI technology, which turns photos into 3D scenes, could be used to convert Look Around's existing imagery into interactive environments, unlocking new possibilities for immersive, location-based apps.

A proper Vision Pro Maps API could transform this experience entirely. Imagine walking (or rather beaming yourself) through a city, interacting with virtual objects tied to specific locations—whether for a game, educational purposes, or even an immersive travel planner. Developers could create experiences where users navigate through historical reconstructions, explore future city plans, or engage with dynamic content as they roam the streets.

The Key to Vision Pro’s Success

These are the kinds of APIs that would not only keep developers like me invested in visionOS but also drive the creation of more immersive and compelling content. Right now, the Vision Pro lacks the killer apps that make users want to buy it, and without enough users, developers are hesitant to invest significant time in the platform. It's a cycle that needs to be broken, and the solution is clear—Apple must make content creation as simple as possible for developers.

I’m not asking for an open system or far-fetched features—I’m asking for APIs that can unleash the real potential of the Vision Pro for developers and users alike. Without them, it’s hard to see how the platform will gain the momentum it needs. But if Apple introduces even just a few of these APIs, I'd be ready to jump back in and build apps that could push the boundaries of AR and VR, ultimately making the Vision Pro the breakthrough device it was meant to be.
